Aberration Lab

Participatory Light Art Generator (Social Distancing Ver.)

Experimental and unpredictable at its core, Aberration Lab is a participatory art experience fueled by creativity & connectedness during a time of social distancing.

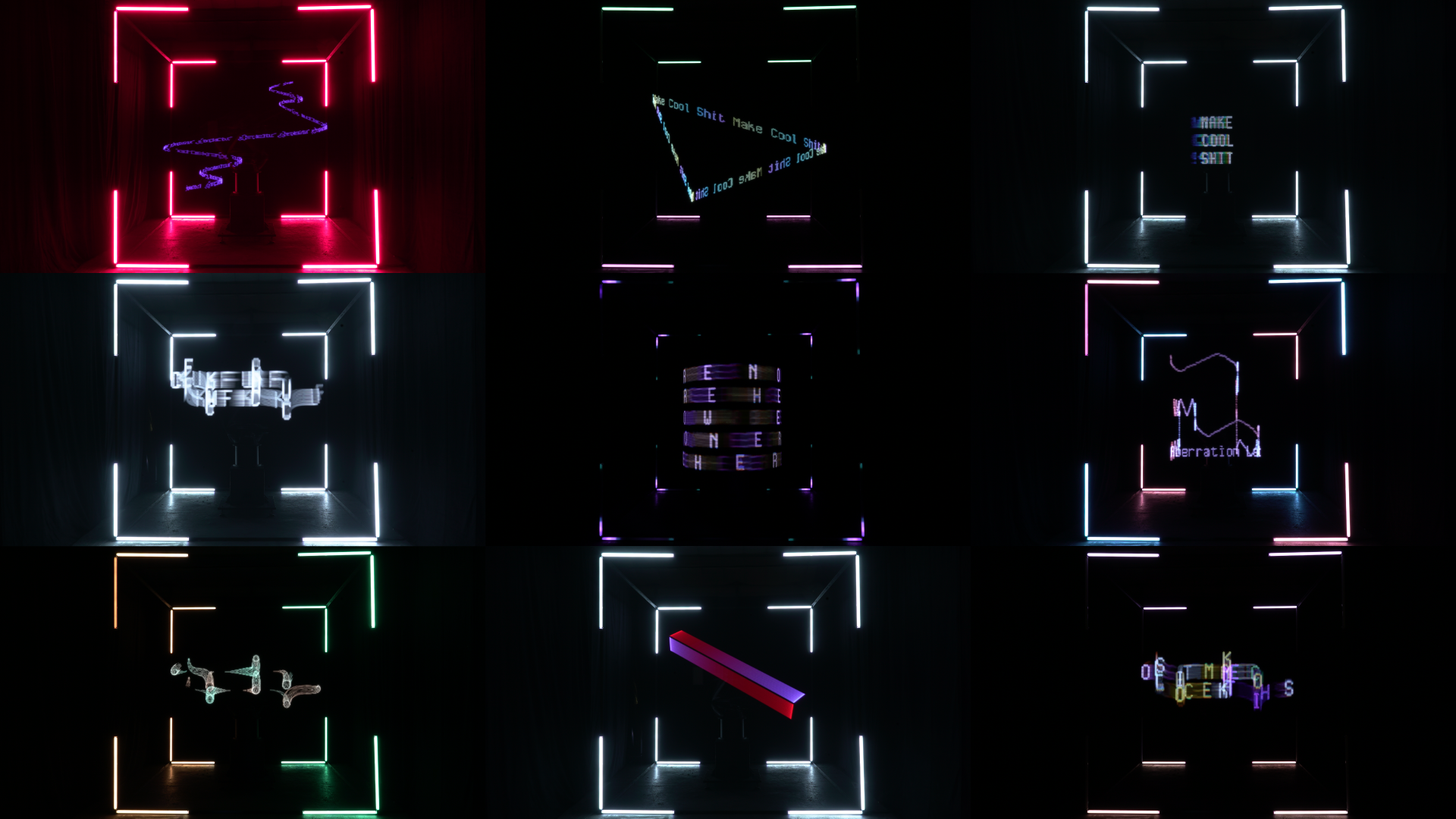

Aberration Lab alchemizes user-submitted tweets from around the world into unique long-exposure light paintings. One of our KUKA robots draws each painting in real time at our LA warehouse studio. Framed by a cube-like structure, the paintings come alive through a custom-engineered light panel, artfully choreographed robot movements, and generative visuals.

A camera streams the entire process live on Twitch, bringing people together in a communal livestream where they can watch the robot draw light paintings or perform choreographed interstitial dances between them.

Interaction

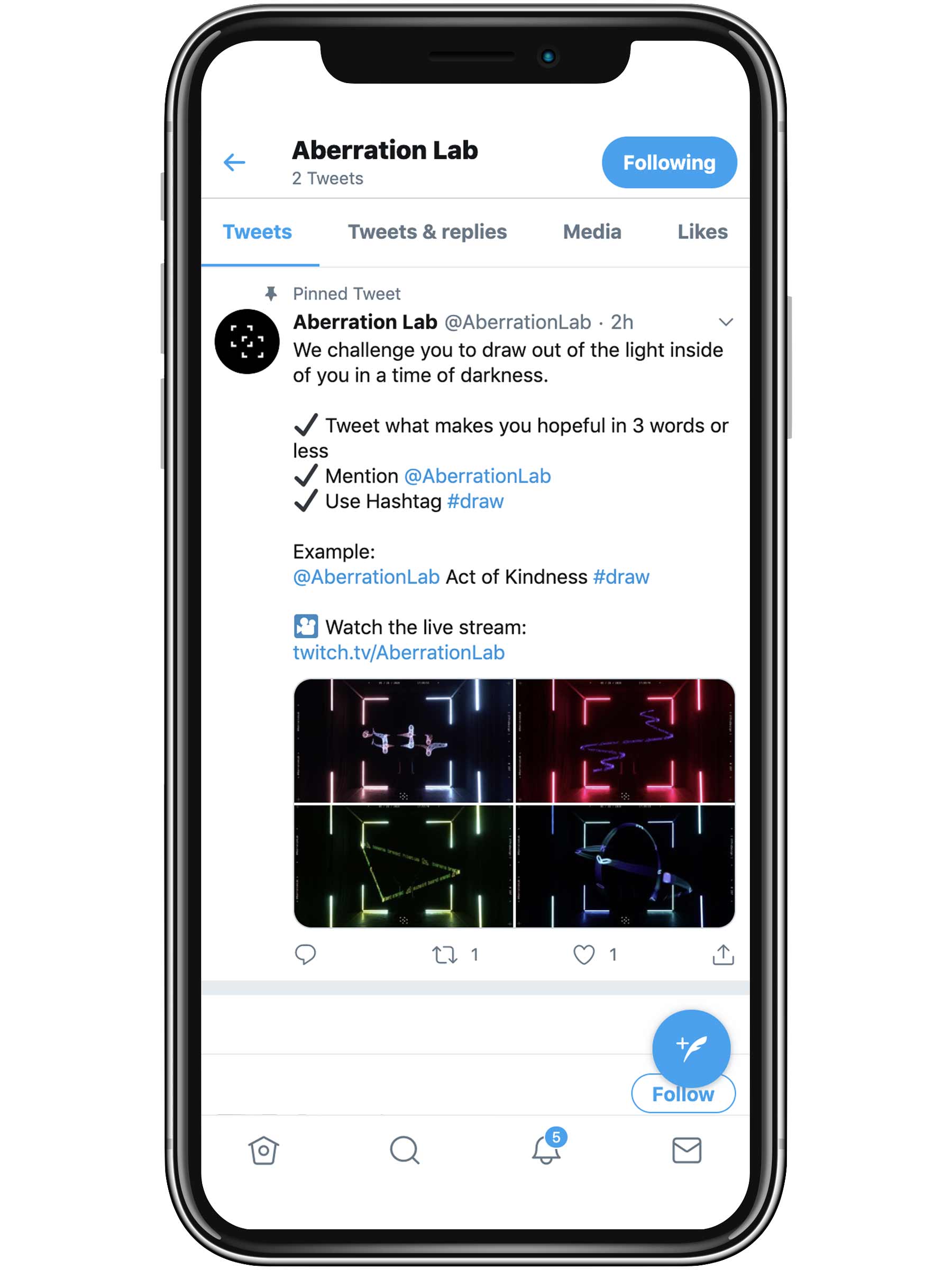

Users participate through Aberration Lab’s Twitter account (@AberrationLab) by tweeting a reply that follows two rules:

1 / Tweet what makes you hopeful in 3 words or fewer.

2 / Use the hashtag #draw

Example: Acts of Kindness #draw
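The two rules above amount to a simple filter on incoming replies. The sketch below shows one way such a check could work. It is an illustration only: the function name `parse_draw_request`, the decision to strip @mentions and other hashtags before counting words, and the case-insensitive hashtag match are all assumptions, not the project’s documented logic.

```python
from typing import Optional

MAX_WORDS = 3        # "3 words or fewer"
HASHTAG = "#draw"    # required hashtag

def parse_draw_request(text: str) -> Optional[str]:
    """Hypothetical filter: return the message to paint, or None if the tweet doesn't qualify."""
    tokens = text.split()
    # Require the #draw hashtag (case-insensitive matching is an assumption).
    if not any(t.lower() == HASHTAG for t in tokens):
        return None
    # Assumption: @mentions (e.g. the reply target) and hashtags are not part of the message.
    words = [t for t in tokens if not t.startswith(("@", "#"))]
    # Reject empty messages and messages longer than the word limit.
    if not words or len(words) > MAX_WORDS:
        return None
    return " ".join(words)
```

With the example tweet, `parse_draw_request("@AberrationLab Acts of Kindness #draw")` returns `"Acts of Kindness"`, while a four-word message or one missing `#draw` returns `None`.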

Participants receive a confirmation tweet from @AberrationLab directing them to a Twitch livestream where they can watch their message become a long-exposure light painting. On Twitch, participants can also watch other hope-filled messages being painted, listen to generative audio driven by the robot’s movements, and use commands to make on-the-fly drawing requests and control the lighting in the physical space.

Sound Design

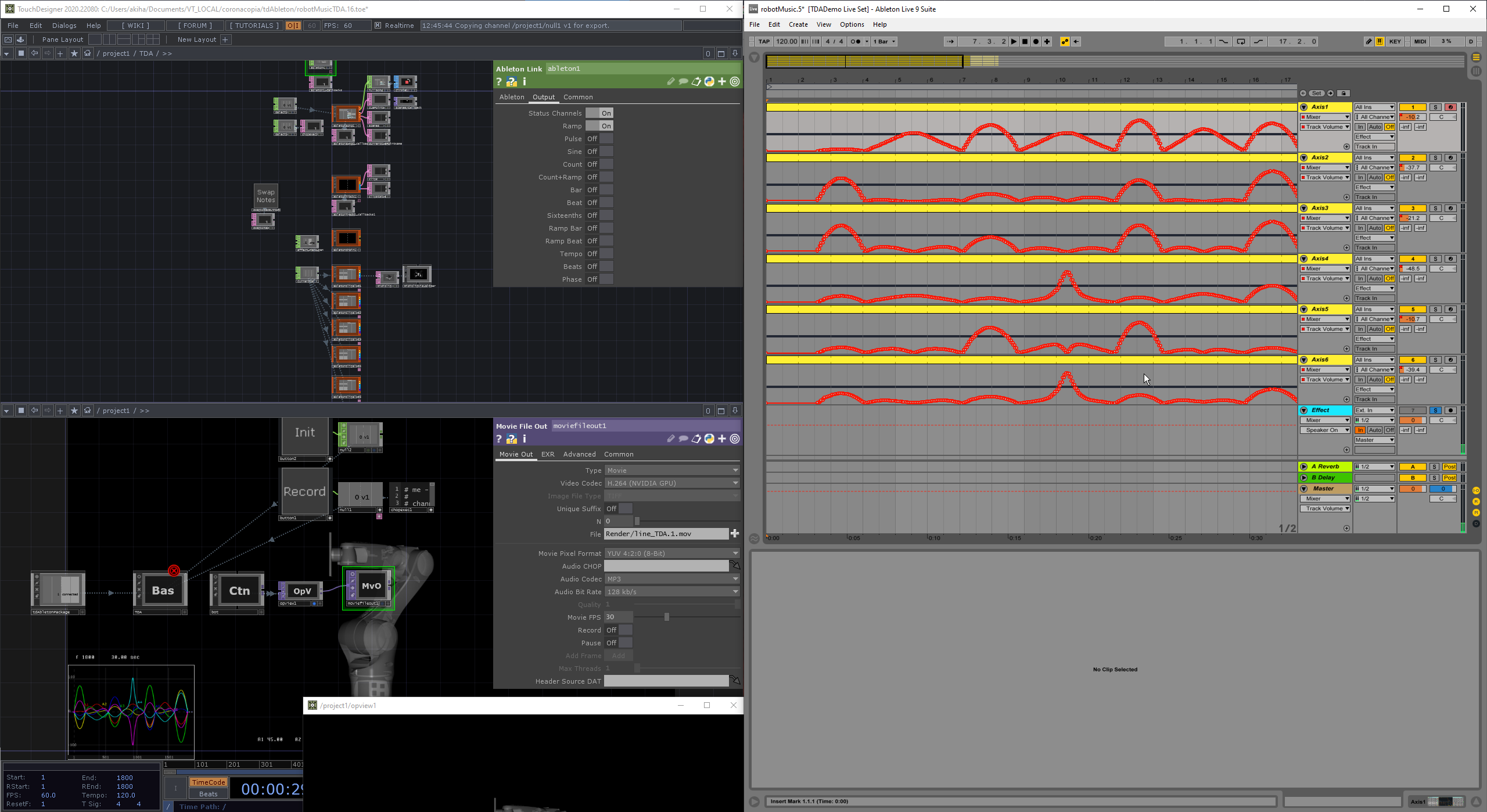

The audio soundscapes are made from a combination of processed audio samples and live data taken from the robot’s movements. By analyzing the speed of the robot’s movements, we can trigger different notes to create melodies and harmonies that change over time.
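A minimal sketch of the speed-to-note idea follows, assuming the robot reports timestamped tool positions in millimetres. The speed range, the pentatonic scale, the base note, and the rule of triggering only when the quantized note changes are all hypothetical choices for illustration; the project’s actual mapping is not documented here.

```python
import math
from typing import List, Optional, Sequence, Tuple

# Hypothetical mapping parameters, not the project's real values.
SCALE = [0, 2, 4, 7, 9]   # major pentatonic intervals in semitones
BASE_NOTE = 48            # MIDI note number for C3
MAX_SPEED = 500.0         # mm/s treated as the top of the speed range

Sample = Tuple[float, Sequence[float]]  # (time in s, (x, y, z) in mm)

def speed_to_note(v: float) -> int:
    """Quantize a speed onto two octaves of the scale (faster = higher)."""
    t = min(max(v / MAX_SPEED, 0.0), 1.0)   # clamp and normalize to [0, 1]
    steps = len(SCALE) * 2                  # two octaves of scale degrees
    idx = min(int(t * steps), steps - 1)
    octave, degree = divmod(idx, len(SCALE))
    return BASE_NOTE + 12 * octave + SCALE[degree]

def notes_from_path(samples: List[Sample]) -> List[int]:
    """Turn consecutive position samples into a sequence of triggered notes."""
    notes: List[int] = []
    prev: Optional[int] = None
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        v = math.dist(p0, p1) / (t1 - t0)   # straight-line speed between samples
        note = speed_to_note(v)
        # Only trigger on a change, so steady motion sustains one note.
        if note != prev:
            notes.append(note)
            prev = note
    return notes
```

Triggering only on note changes keeps a constant-speed stroke from retriggering the same pitch every sample; slower or faster sections of a stroke then read as melodic movement.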

Take-Away

Once the robot finishes painting, users receive a static image and an animated GIF of their artwork, stamped with uniquely identifying graphical metadata (username, time, date, and painting number), to retweet or share on other social media platforms.

Programming

TouchDesigner acts as a central hub, taking various inputs to control the light panel, environmental lights, camera, and generative audio. On the live stream, a light trail effect is added to visualize the long exposures as they are drawn in real time.
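A light-trail overlay of this kind is commonly built as a decaying feedback buffer: each new frame is combined with a faded copy of the previous result, so bright points leave streaks that slowly dim. In TouchDesigner this is typically done with a Feedback TOP loop, but the project’s actual network isn’t described, so the pure-Python sketch below (with a hypothetical `update_trail` function and `decay` parameter) only illustrates the per-pixel math.

```python
from typing import List

Frame = List[List[float]]  # grayscale brightness values in [0, 1]

def update_trail(trail: Frame, frame: Frame, decay: float = 0.9) -> Frame:
    """Fade the previous trail by `decay`, then keep the brighter of it and the new frame."""
    return [
        [max(t * decay, f) for t, f in zip(trail_row, frame_row)]
        for trail_row, frame_row in zip(trail, frame)
    ]

# Usage: a single light moving across a 1x3 strip over three frames.
trail: Frame = [[0.0, 0.0, 0.0]]
for frame in ([[1.0, 0.0, 0.0]], [[0.0, 1.0, 0.0]], [[0.0, 0.0, 1.0]]):
    trail = update_trail(trail, frame, decay=0.5)
```

After the loop, `trail` is `[[0.25, 0.5, 1.0]]`: the light’s current position is at full brightness and earlier positions fade geometrically. Taking the maximum rather than summing keeps values in range without clipping; a `decay` closer to 1 gives longer trails.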

Robotics

Using an industrial robot arm, the light paintings can be drawn with sub-millimeter accuracy to achieve unique forms and shapes.

Scope

- Robotics

- Creative Direction

- Audio / Visual Production

- Custom Fabrication

- Design

- Engineering

- Experiential Design

- Generative Content

- Interactive Installation

- Narrative & Strategy

- R&D / Prototyping